Nuances of development for Apple Vision Pro

Our Unity team is working on developing VR projects. Recently, Apple entered this market with a new device – the Vision Pro. We’ll talk about it in today’s article.

- About Apple Vision Pro

- Nuances of development for Apple Vision Pro

- Voice recognition

- The use of shaders

- User input

- Animations

- Sound

- Triggers

- Receive data about the real world

- Occlusion in Mixed Reality

- Working with application state

- Interface of interaction

- Controllers

- Documentation

- Apple Vision Pro Headset Requirements and Features

- Pros of Apple Vision Pro

Our Unity team develops VR projects. Our main target platform is Oculus Quest (Meta Quest), and most of our work is for the Quest 2 and Quest 3 headsets. Our main engine is Unity, which is well suited to mobile development, including Oculus Quest.

Image 1 – Vision Pro and Oculus Quest

Now Apple has entered the virtual reality headset market with Vision Pro, a separate, unique device with its own operating system, visionOS. For developers, this is a completely new platform. Unity wants to keep up with the times, so together with Apple it has prepared tools that already let developers create applications for the headset. Naturally, there were difficulties and nuances along the way, which we describe below.

It is worth noting that Apple Vision Pro is quite an expensive device and was released only recently, so few specialists have the opportunity to develop for this headset. We decided to be among the first to take advantage of that opportunity.

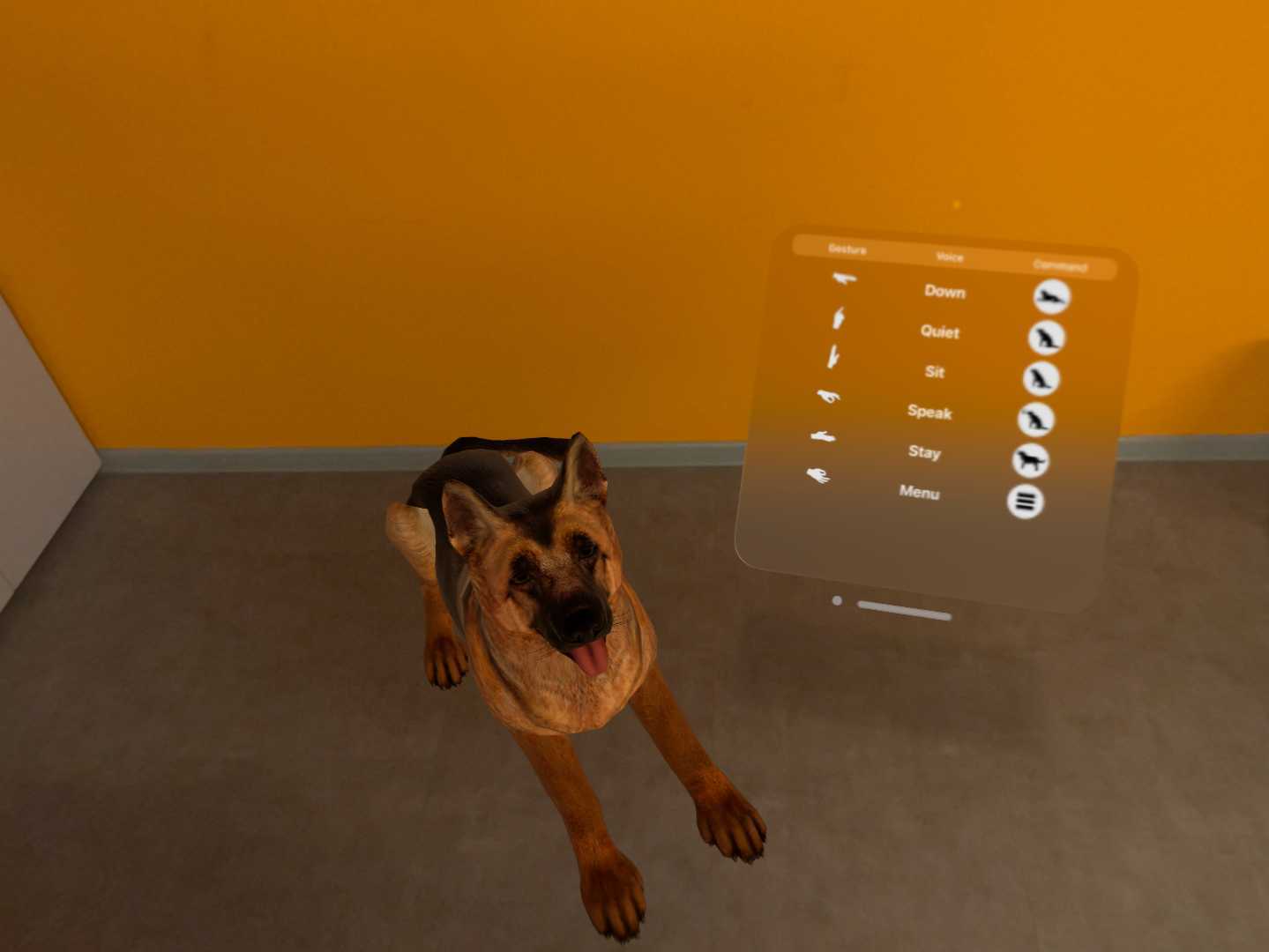

We will describe the nuances of development for Apple Vision Pro using the example of our mixed reality application Dog MR, originally created for Meta Quest. At the end of the article, we cover in more detail the hardware and software that Apple Vision Pro development requires.

Image 2 – Dog MR

About Apple Vision Pro

Apple Vision Pro and its operating system support three types of applications:

- Windowed — applications that run in a flat window. In fact, these are regular iOS applications.

- Fully Immersive VR — a full-fledged virtual reality application in which the user sees only the virtual world and interacts exclusively with it.

- PolySpatial MR — an augmented reality application in which the user sees both the real world and virtual objects.

It is important to note that the application can only be of one type — it is specified in the project settings and cannot be changed at runtime.

Nuances of development for Apple Vision Pro

Voice recognition

One of the requirements was the ability to work without the Internet. For voice recognition, we used a third-party library available on various platforms. It uses a local database and can work without an Internet connection.

This library does not work on Apple Vision because its author has not yet prepared a release for this platform, and there are no alternatives at the moment. To implement this feature, we decided to use the native speech recognition library (the same one used for Siri).

However, using it led to several problems. The first is that the headset is currently focused on the American market, so the native speech recognition library only supports English.

The second problem was that Unity handles system events of minimizing/closing the application incorrectly, which is why the native speech recognition library breaks. So far, we have not been able to find a solution to this problem, so we are waiting for updates from Unity and Apple.

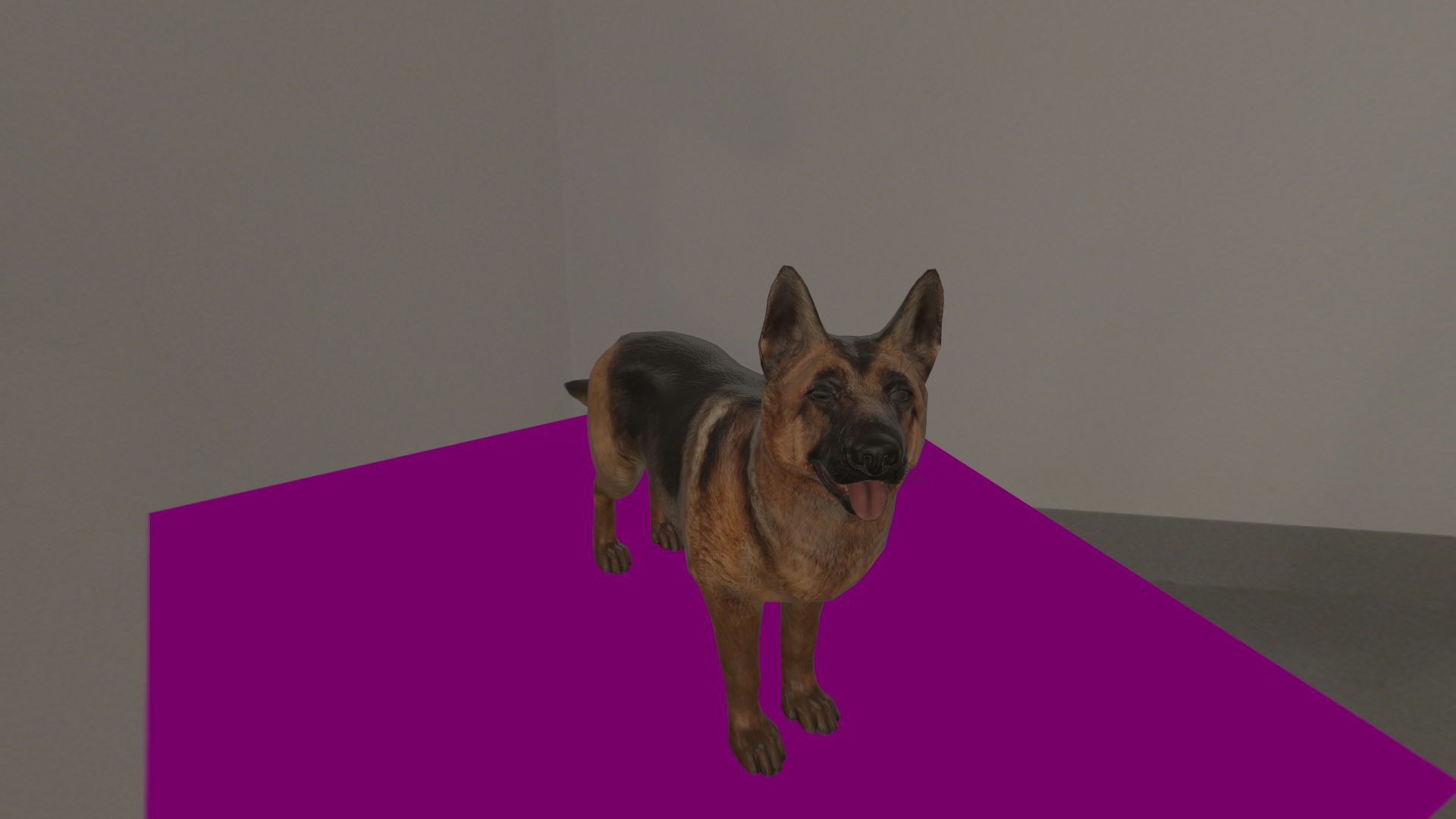

The use of shaders

Engines such as Unity use shaders to configure the visual properties of objects. Shaders are small programs that are responsible for how an object is drawn on the screen. They can be divided into two groups: built-in (supplied as part of the engine) and custom (written or modified by us). Custom shaders, in turn, can be divided into two groups by how they are created: written in code, or built with the visual programming tool Shader Graph.

Ultimately, both kinds of shaders are compiled into low-level code for the target platform during the build. This process is controlled entirely by the engine and is almost impossible to influence.

During development, it turned out that some features of the built-in shaders are not compiled correctly, so objects look significantly different from what we expect to see. For example, there are problems with the interpretation of the Metallic/Roughness texture, as well as with transparent objects of the Fade or Transparent types. In our case, the material on the dog is not displayed as beautifully as on other platforms. Unfortunately, it is quite difficult or even impossible to fix this seemingly obvious problem.

Image 3 – Issues with transparent shader

There is also a mandatory requirement for custom shaders: they must be created with the Shader Graph tool mentioned above. Since we were migrating an existing project that already had custom shaders written in code, we had to rebuild them in Shader Graph. If a project has many such shaders, this can be a problem, since migrating to the Shader Graph format is not always easy.

User input

Apple Vision uses gaze tracking and hand gesture tracking to control the input system. In particular, a short pinch is used to click. Unity has a special Input Module to control input, which is a layer between the OS system input and our application.

Currently, visionOS support in the existing Unity Input Module is very limited, so most of the standard things that usually work by default have to be created from scratch. The lack of detailed documentation and open source code greatly complicates the process.

Fun fact: seemingly trivial tasks such as positioning the UI panel and its orientation to the user require the use of special API commands.
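The placement itself is simple geometry, which is what makes the need for special API calls surprising. As a minimal, engine-agnostic sketch in Python (this is not the visionOS or Unity API; the function name `place_panel`, the default distance of 1 m, and the yaw convention with 0 degrees facing +z are assumptions made for illustration), a panel can be put in front of the user's head and turned toward them like this:

```python
import math


def place_panel(head_pos, head_yaw_deg, distance=1.0):
    """Return (position, yaw_deg) for a panel placed in front of the user.

    Hypothetical helper: positions are (x, y, z) tuples in metres and yaw is
    measured around the vertical axis, with 0 degrees facing +z.
    """
    yaw = math.radians(head_yaw_deg)
    # Horizontal forward direction of the head (pitch and roll are ignored).
    forward_x, forward_z = math.sin(yaw), math.cos(yaw)
    panel_pos = (
        head_pos[0] + forward_x * distance,
        head_pos[1],  # keep the panel at eye height
        head_pos[2] + forward_z * distance,
    )
    # Yaw of the direction that points from the panel back to the user.
    to_user_x = head_pos[0] - panel_pos[0]
    to_user_z = head_pos[2] - panel_pos[2]
    panel_yaw = math.degrees(math.atan2(to_user_x, to_user_z)) % 360.0
    return panel_pos, panel_yaw
```

Whether the resulting yaw has to be flipped by 180 degrees depends on which side of the panel the engine treats as its front.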

Animations

Our project has an animated character — a dog. In modern engines, in particular, in Unity, there is a system for optimizing such objects. When the character is out of the camera’s field of view, animations are recalculated and updated only partially to free up CPU and GPU resources. When the object is in the field of view again, the animations start working in full. Usually, this optimization is enabled by default.

Due to the specifics of the camera rig in Apple Vision, Unity cannot correctly determine whether the object is in the field of view or not. Because of this, animated characters look incorrect. Disabling optimization solves the problem. In our case, there was only one animated character, so this solution is acceptable. But there may be situations when there are many such objects and this problem can become very serious.

Sound

3D games have to position sounds in three-dimensional space. Ideally, on hearing a sound, the player should understand where it comes from and from what distance, and feel the character of the current environment (a small room, an open space, a cave, etc.). The same sound should be heard differently in each case. In VR applications, this is especially important.

Image 4

In Unity, the system responsible for 3D sound is called Spatialized Sound System. It is also possible to use third-party systems developed for specific platforms. Meta Quest, for example, has its own Spatialized Sound System.

At the moment, Apple has not yet developed its own system and relies on the one built into Unity. On the device, this system does not work correctly: sometimes the sound is positioned incorrectly. For example, we hear the voice of our dog on the left, although in the application it should come from the right. So far, this problem has not been solved, and we are waiting for updates and fixes from Unity and Apple.
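To make "left" and "right" concrete, here is a minimal sketch of one common way to derive stereo gains from the listener's pose: constant-power panning with simple distance attenuation. It is a generic illustration in Python, not the algorithm used by Unity or Apple; the function name `stereo_gains`, the 2D (x, z) coordinates, and the `min_distance` parameter are assumptions.

```python
import math


def stereo_gains(listener_xz, listener_yaw_deg, source_xz, min_distance=1.0):
    """Return (left_gain, right_gain) for a sound source.

    Hypothetical helper: positions are (x, z) pairs on the horizontal plane,
    yaw 0 degrees means the listener faces +z and +x is to their right.
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    distance = math.hypot(dx, dz)

    yaw = math.radians(listener_yaw_deg)
    right_x, right_z = math.cos(yaw), -math.sin(yaw)  # listener's right vector

    if distance == 0.0:
        pan = 0.0  # source is inside the head: play it centered
    else:
        # -1 = fully left, 0 = straight ahead or behind, +1 = fully right
        pan = (dx * right_x + dz * right_z) / distance
        pan = max(-1.0, min(1.0, pan))

    # Constant-power panning: left^2 + right^2 stays constant for any pan.
    angle = (pan + 1.0) * math.pi / 4.0
    # Simple inverse-distance attenuation, clamped near the listener.
    attenuation = min_distance / max(distance, min_distance)
    return math.cos(angle) * attenuation, math.sin(angle) * attenuation
```

With the listener at the origin facing +z, a dog barking at `(1, 0)` should be louder in the right channel. If the right vector is computed from a wrong head pose, the channels swap, which matches the symptom described above.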

Triggers

Game engines have a special tool for detecting when certain volumes intersect: triggers. In our project, triggers are used to make the dog close its mouth when the user puts their hand to its muzzle. This works with the help of physical spheres around the animal's head. When the hand enters a sphere, the trigger fires and the dog closes its mouth so that the user does not feel the threat of a bite.

Image 5 – The dog wants to bite the finger

Triggers are not supported in Apple Vision, which creates a problem. One solution is to check the distance from the hand to the head in every frame, but this is less efficient than triggers, because the Unity physics engine is optimized to process many triggers quickly. In our case, triggers were used in only one place, so we removed them and check the distance from the hand to the dog in every frame instead.
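The per-frame distance check can be sketched as follows. This is an engine-agnostic illustration in Python rather than our Unity code; the names `Vec3` and `ProximityTrigger` and the 0.15 m radius in the usage note are assumptions. Comparing squared distances avoids a square root on every frame, and remembering the previous state lets the check report enter and exit events the way a trigger would.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def sqr_distance(a: Vec3, b: Vec3) -> float:
    """Squared distance between two points (no square root needed)."""
    dx, dy, dz = a.x - b.x, a.y - b.y, a.z - b.z
    return dx * dx + dy * dy + dz * dz


class ProximityTrigger:
    """Hypothetical stand-in for a sphere trigger, polled once per frame."""

    def __init__(self, radius: float):
        self.radius_sq = radius * radius
        self.inside = False

    def update(self, center: Vec3, point: Vec3):
        """Return "enter", "exit", or None for the current frame."""
        now_inside = sqr_distance(center, point) <= self.radius_sq
        event = None
        if now_inside and not self.inside:
            event = "enter"  # e.g. the dog closes its mouth
        elif not now_inside and self.inside:
            event = "exit"   # e.g. the dog may open its mouth again
        self.inside = now_inside
        return event
```

In a game loop, `ProximityTrigger(0.15).update(head_position, hand_position)` would be called once per frame for each tracked hand. The cost grows with the number of sphere and hand pairs, which is why this approach only suits projects with a handful of such checks.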

Receive data about the real world

To make the experience of presence in augmented reality effective, interesting and realistic, it is important for us to receive data about the real world in the application so that virtual objects can correctly interact with existing ones. For example, we plan to place a virtual object on a real table or want it to physically collide with the walls of a real room. The main type of such data is the scanned environment in the form of triangles (“meshes”).

Additional information can include the type of the object: a table, a chair, etc.

The headset uses cameras and LiDAR to collect and process such data. The information is then transmitted to Unity, where we can use it in our application.

Image 6

Apple Vision Pro has a number of peculiarities in how it transmits data to Unity and what data it transmits. The first and most important difference is that the data is generated and transferred in real time: the meshes are constantly updated and change. Collisions cannot be applied to such objects in the engine. Accordingly, we cannot create, for example, a virtual ball that will bounce off real walls.

Another problem, indirectly related to the first, is that the dynamic generation and update of the environment data does not allow us to mark the room and indicate where and what object is located so that our dog can understand the arrangement of objects.

Occlusion in Mixed Reality

Occlusion in Mixed Reality is the process by which objects closer to the viewer overlap those that are further away. This allows for a believable interaction between virtual and real objects. For example, if you wave your hand in front of the camera, a 3D object located further away will not be displayed on top of it.

In MR headsets, we render virtual objects over the real-world image received from the device’s camera. If occlusion systems are not enabled, all digital objects will be displayed over existing ones, regardless of their location. For example, a dog will not be covered by a table.
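The depth comparison behind occlusion can be illustrated with a tiny software renderer for a single row of pixels. This is a conceptual sketch in Python, not how any headset or engine implements it; the function `render_row` and its tuple formats are assumptions. Real-world surfaces write only depth, and a virtual object is drawn at a pixel only if it is closer than whatever depth is already stored there.

```python
PASSTHROUGH = "."  # pixel that shows the camera image of the real world


def render_row(width, real_surfaces, virtual_objects):
    """Render one row of pixels with a depth test.

    real_surfaces:   list of (start, end, depth) spans; they write depth only.
    virtual_objects: list of (start, end, depth, symbol) spans.
    Spans cover pixel columns start..end-1; smaller depth means closer.
    """
    color = [PASSTHROUGH] * width
    depth = [float("inf")] * width

    # Pass 1: real surfaces fill the depth buffer but leave the color alone,
    # so the camera image stays visible where they are.
    for start, end, d in real_surfaces:
        for x in range(start, end):
            depth[x] = min(depth[x], d)

    # Pass 2: virtual objects are drawn only where they pass the depth test.
    for start, end, d, symbol in virtual_objects:
        for x in range(start, end):
            if d < depth[x]:
                depth[x] = d
                color[x] = symbol

    return "".join(color)
```

For a dog 2 m away that spans columns 2 to 5, `render_row(10, [], [(2, 6, 2.0, "D")])` returns `"..DDDD...."`: without occlusion data the dog is drawn in full. Adding a table 1 m away over columns 4 to 7, `render_row(10, [(4, 8, 1.0)], [(2, 6, 2.0, "D")])` returns `"..DD......"`, so the part of the dog behind the table is hidden.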

Meta Quest uses the Depth API plugin, which relies on a depth camera to display real objects correctly in front of virtual ones. Apple Vision technology handles overlapping very well for windowed applications, but, unfortunately, it does not work at all in full MR mode.

Unity for Apple Vision uses ARFoundation, which creates planes based on camera data (the floor, the table, the wall, etc.). We can assign these planes a special material that is itself invisible but prevents objects behind the plane from being rendered.

However, due to the dynamic generation of planes, recalculations may occur. Because of this, for example, when we attach a flag to a plane and come closer, part of it may intersect with a real wall.

Image 7 – The flag sank into the wall.

For the hands, we used a workaround: a Unity package that tracks hand gestures lets us drive a 3D hand model, and we applied to that model the same material that we applied to the planes.

Working with application state

There is a bug in Apple Vision: when you click the cross in the status bar to close a full mixed reality (unbounded) application, the menu windows remain on the screen, turning gray and inactive, and the application is merely minimized. Bounded applications, by contrast, close completely. This is inconvenient for users: when they relaunch the application, instead of starting from scratch they see the same state as before the exit.

Interface of interaction

On Oculus Quest 3, we initially implemented the ability to give commands to the dog using voice and gestures. That implementation was not designed with other platforms in mind and therefore used the gestures provided by Meta in its SDK.

Image 8 – Gesture interaction

When Apple Vision came out, we had to redesign the control system. We switched to a solution provided by Unity that exposes the hand tracking offered by Apple through Unity's own hand input. This allowed us to create a more universal and flexible system that can work on different devices. In this way, we made our application compatible with new technologies and devices while keeping control convenient for the user.

Controllers

In another project of ours, developed for Oculus Quest, we used controllers. They allowed the user to interact with virtual objects: pull levers, rotate objects, and perform other actions. Controllers provided a convenient and precise way to interact with the virtual environment.

However, Apple Vision Pro does not have controllers. This creates a challenge: we need to develop a way for the user’s hand to virtually “grab” an object. To solve this problem, we will have to create our own interaction system. It must take into account the specifics of Apple Vision and use the capabilities of Unity to integrate hand control.

This requires developing new methods for handling gestures that will interpret and turn them into actions in the virtual environment. Thus, the transition from Oculus Quest controllers to touchless interactions in Apple Vision Pro requires significant changes to our interaction system, but opens up new opportunities for creating a more natural user experience.
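One possible building block for such a system is a pinch-to-grab state machine driven by fingertip positions. The sketch below is an assumption-laden illustration in Python, not an existing Unity or visionOS API: the class `PinchGrabber`, the threshold values, and the dictionary of object positions are all hypothetical. Two thresholds (hysteresis) keep the grab from flickering when the fingers hover near the pinch distance.

```python
import math

# Assumed thresholds in metres; real values would need tuning on the device.
PINCH_START_M = 0.015  # fingertips closer than this start a pinch
PINCH_END_M = 0.030    # fingertips farther apart than this end it


class PinchGrabber:
    """Hypothetical hand-grab logic, updated once per frame for one hand."""

    def __init__(self, grab_radius=0.10):
        self.grab_radius = grab_radius
        self.pinching = False
        self.held = None  # name of the object currently held, if any

    def update(self, thumb_tip, index_tip, objects):
        """objects maps names to (x, y, z); the held object follows the hand."""
        gap = math.dist(thumb_tip, index_tip)
        pinch_point = tuple((t + i) / 2 for t, i in zip(thumb_tip, index_tip))

        if not self.pinching and gap < PINCH_START_M:
            self.pinching = True
            self.held = self._nearest(pinch_point, objects)
        elif self.pinching and gap > PINCH_END_M:
            self.pinching = False
            self.held = None  # releasing the pinch drops the object

        if self.held is not None:
            objects[self.held] = pinch_point
        return self.held

    def _nearest(self, point, objects):
        """Closest object within grab_radius of the pinch point, or None."""
        best, best_distance = None, self.grab_radius
        for name, position in objects.items():
            distance = math.dist(point, position)
            if distance <= best_distance:
                best, best_distance = name, distance
        return best
```

A lever or a rotating object would need extra constraints on top of this (for example, projecting the pinch point onto the lever's arc), but the pinch detection and the choice of the nearest object stay the same.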

Documentation

Since the visionOS platform is quite young, the documentation for both the system itself and the related development tools does not always contain all the necessary information. Many things have to be worked out from the available examples, including third-party ones, which often amounts to reverse engineering.

Apple Vision Pro Headset Requirements and Features

- To compile and build a project, you need a Mac with an Apple silicon processor (M1, M2, M3). As with regular iOS applications, the Xcode project can be generated in Unity even on Windows, but building the application itself and running it on the device requires macOS on Apple silicon. Unity Cloud Build (UCB) is Unity's service for automating application builds and deployment. At the moment, it uses Mac computers with Intel processors, so UCB does not yet support building projects for visionOS.

- A Unity Pro license is required for development. To work with visionOS, Unity uses the PolySpatial plugin, which is only available in the Pro version. If the Personal version is installed on the computer, the plugin is automatically removed and the project cannot be launched. In addition, visionOS does not support the Splash Screen, which cannot be disabled in the Personal version.

- Unity 2022.3.19f1 or later and a recent version of Xcode that supports the visionOS SDK.

Pros of Apple Vision Pro

- TestFlight is used for distribution, as for any iOS application

- You can use native SwiftUI

This is a UI framework provided directly by Apple and written in Swift. In practice, we can open native windows directly from Unity and move, resize, and close them using standard system controls.

To support these windows, however, we had to write our own small wrapper module that receives and processes events from the UI elements of a window. The settings menu and tips in our application are implemented with SwiftUI.

This UI is developed using native Apple tools, assembled in Xcode, and placed in Unity as a plugin.

Native windows look like frosted glass. All objects behind them are blurred, even if they are virtual, created in our Unity application.